Digital Ocean

With the ever-increasing interest in SONAR applications for detecting, monitoring, and classifying acoustic targets, as well as for communicating with nearby participants, a wide range of scenarios and configurations must be considered. Testing these setups manually by deploying them in the open ocean, in ports, or in water tanks can be both costly and time-consuming, and the resulting slow feedback loops hold back the development of algorithms and approaches for modern SONAR systems. A "digital twin" of the water column and the acoustic targets that commonly inhabit it provides rapid feedback and therefore enables faster research and development of SONAR applications.

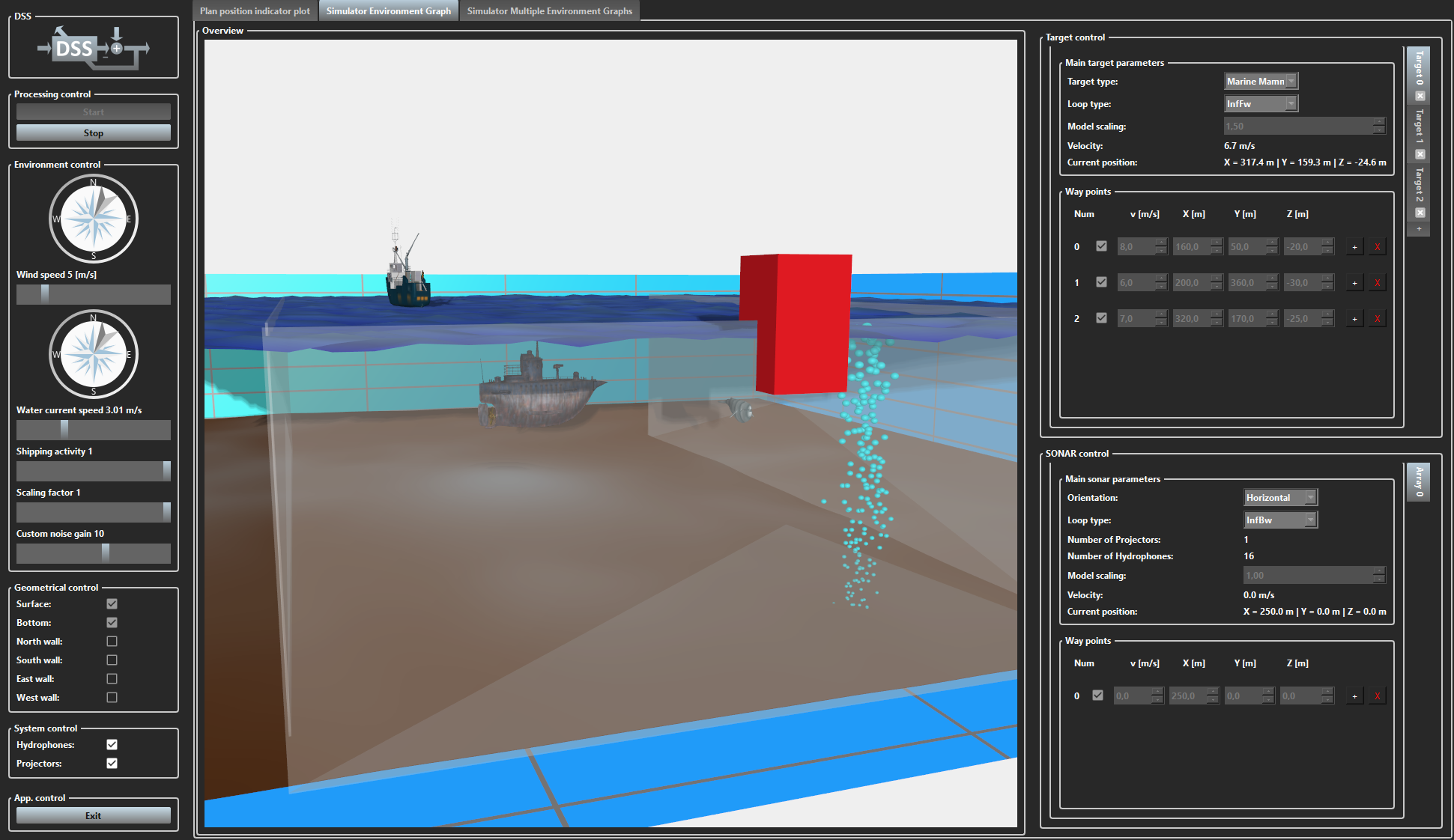

This demo presents such a simulated ocean environment (a "digital ocean") for SONAR applications, containing multiple simulated acoustic targets such as ships, walls, marine mammals, and bubbles. Each target is placed in a 3D environment and has its own trajectory and frequency response, which models its backscatter behavior when it reflects acoustic waves. In addition, each target can act as an active sound source, emitting acoustic signals from its own location. The targets are initialized during the initialization phase from the parameters specified in the digital ocean configuration, and further targets can be added dynamically at runtime.
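The target model described above can be sketched as a small data structure. This is a minimal sketch, not KiRAT's API: the class names, the config dictionary keys (`targets`, `start`, `velocity`, `gain`), the constant-velocity trajectory, and the flat backscatter response are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def linear_trajectory(start: Vec3, velocity: Vec3) -> Callable[[float], Vec3]:
    """Hypothetical constant-velocity motion: p(t) = start + velocity * t (m, s)."""
    def position(t: float) -> Vec3:
        return (start[0] + velocity[0] * t,
                start[1] + velocity[1] * t,
                start[2] + velocity[2] * t)
    return position


def flat_response(gain: float) -> Callable[[float], complex]:
    """Hypothetical frequency-independent backscatter gain (a crude placeholder)."""
    return lambda freq_hz: complex(gain, 0.0)


@dataclass
class AcousticTarget:
    name: str
    trajectory: Callable[[float], Vec3]             # position as a function of time
    frequency_response: Callable[[float], complex]  # backscatter gain vs. frequency
    emission: Optional[Callable[[float], float]] = None  # set if the target also emits sound

    def position(self, t: float) -> Vec3:
        return self.trajectory(t)

    @property
    def is_active_source(self) -> bool:
        return self.emission is not None


@dataclass
class DigitalOcean:
    targets: List[AcousticTarget] = field(default_factory=list)

    @classmethod
    def from_config(cls, config: dict) -> "DigitalOcean":
        """Initialization phase: one target per entry of a hypothetical config dict."""
        ocean = cls()
        for spec in config.get("targets", []):
            ocean.add_target(AcousticTarget(
                name=spec["name"],
                trajectory=linear_trajectory(spec["start"], spec["velocity"]),
                frequency_response=flat_response(spec["gain"]),
            ))
        return ocean

    def add_target(self, target: AcousticTarget) -> None:
        """Also usable while the simulation is running (dynamic addition)."""
        self.targets.append(target)


ocean = DigitalOcean.from_config({"targets": [
    {"name": "ship", "start": (0.0, 0.0, 0.0), "velocity": (5.0, 0.0, 0.0), "gain": 0.8},
]})
ocean.add_target(AcousticTarget("bubble", linear_trajectory((20.0, 0.0, -50.0), (0.0, 0.0, 0.25)),
                                flat_response(0.05)))
print(ocean.targets[0].position(2.0))  # (10.0, 0.0, 0.0)
```

Representing trajectories and frequency responses as callables keeps the target type independent of any particular motion or scattering model, so a more realistic model could replace either placeholder without changing the container.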

User interface of the environment simulation.

Dynamic environmental processes and effects, such as the ocean surface, ambient noise, and wind and water currents, are also simulated. Their properties, such as the direction of currents or the noise level, can be adjusted in real time through the GUI or set in advance in the digital ocean configuration. This makes it possible to design and evaluate a wide range of environmental scenarios.
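As a rough illustration of runtime-adjustable environment parameters, the sketch below models a water current as a constant horizontal velocity and ambient noise as white Gaussian noise whose power is set in dB relative to unit power. The field names, the dB reference, and both models are assumptions for illustration; realistic ocean ambient noise is colored rather than white.

```python
import math
import random
import statistics
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class EnvironmentState:
    """Hypothetical runtime-adjustable environment parameters (names are illustrative)."""
    noise_level_db: float = -20.0      # ambient noise power relative to unit power, in dB
    current_speed_mps: float = 0.5     # water current speed
    current_heading_deg: float = 0.0   # direction the current flows towards; 0 deg = +x axis

    def current_velocity(self) -> Tuple[float, float]:
        heading = math.radians(self.current_heading_deg)
        return (self.current_speed_mps * math.cos(heading),
                self.current_speed_mps * math.sin(heading))

    def ambient_noise(self, n: int, rng: random.Random) -> List[float]:
        """White Gaussian noise whose power matches noise_level_db."""
        sigma = 10.0 ** (self.noise_level_db / 20.0)  # dB power -> amplitude standard deviation
        return [rng.gauss(0.0, sigma) for _ in range(n)]


def drift(position: Tuple[float, float, float], env: EnvironmentState,
          dt: float) -> Tuple[float, float, float]:
    """Advect a passive target horizontally with the current for dt seconds."""
    vx, vy = env.current_velocity()
    x, y, z = position
    return (x + vx * dt, y + vy * dt, z)


env = EnvironmentState()
print(drift((0.0, 0.0, -10.0), env, 4.0))  # (2.0, 0.0, -10.0)
env.noise_level_db = -10.0  # e.g. a GUI slider changed at runtime
noise = env.ambient_noise(20_000, random.Random(0))
print(statistics.pstdev(noise))  # sample std, expected to be near 10 ** (-10 / 20), about 0.316
```

Because the generators read the current field values each time they are called, changing a field between calls is enough to emulate a real-time adjustment.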

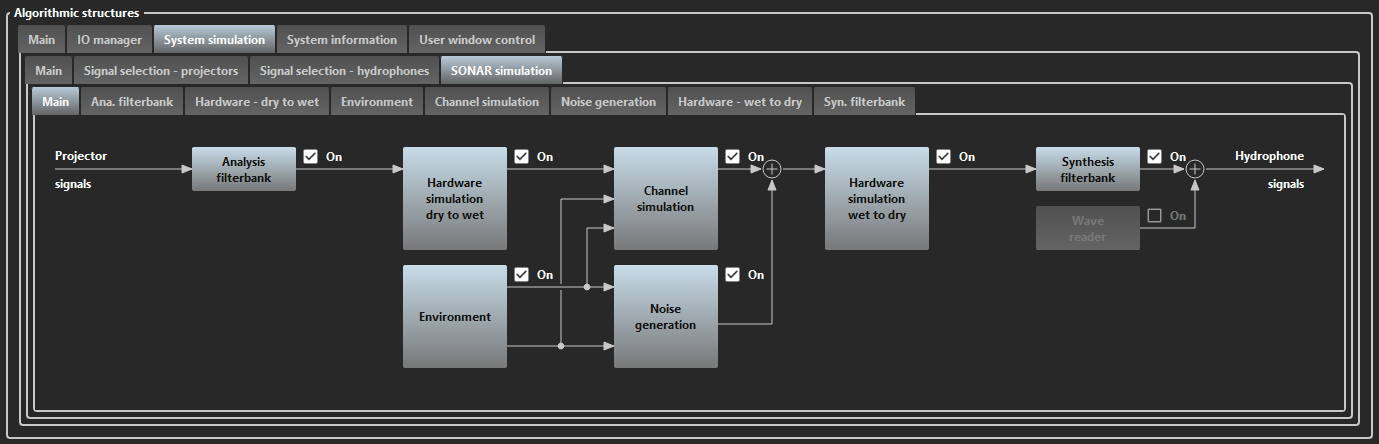

The simulation processes the projector signals in the frequency domain, as shown below. The transformed signals pass through a processing chain that emulates the propagation of acoustic waves through the configured environmental channels.
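Frequency-domain propagation can be illustrated with a single echo path: transform the projector signal with an FFT, multiply it by a channel transfer function, and transform back. In this minimal sketch the transfer function combines a two-way delay (a linear phase term), the target's backscatter frequency response, and two-way spherical spreading. Absorption, multipath, surface effects, and Doppler are deliberately omitted, and the function name and parameters are hypothetical rather than taken from KiRAT.

```python
import numpy as np


def propagate_echo(tx, fs, target_range_m, target_response, c=1500.0):
    """Frequency-domain sketch of a single monostatic echo path.

    Assumed simplifications: a point target, spherical spreading of 1/R per
    direction, constant sound speed c, and no absorption, multipath, or Doppler.
    """
    tx = np.asarray(tx, dtype=float)
    delay_s = 2.0 * target_range_m / c                   # two-way travel time
    n_fft = len(tx) + int(np.ceil(delay_s * fs)) + 1     # zero-pad so the echo does not wrap around
    spectrum = np.fft.rfft(tx, n=n_fft)
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    channel = (
        np.exp(-2j * np.pi * freqs * delay_s)            # propagation delay as a linear phase
        * target_response(freqs)                         # target backscatter frequency response
        / target_range_m ** 2                            # two-way spherical spreading
    )
    return np.fft.irfft(spectrum * channel, n=n_fft)


fs = 48_000.0
tx = np.zeros(64)
tx[0] = 1.0                                              # unit impulse as the projector signal
rx = propagate_echo(tx, fs, target_range_m=15.0, target_response=lambda f: np.ones_like(f))
print(int(np.argmax(np.abs(rx))))                        # 960 = 2 * 15 m / 1500 m/s * 48 kHz
```

Zero-padding to at least the signal length plus the echo delay matters because multiplying spectra corresponds to circular convolution; without it, a late echo would wrap around to the start of the output.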

System simulation processing chain GUI in KiRAT.

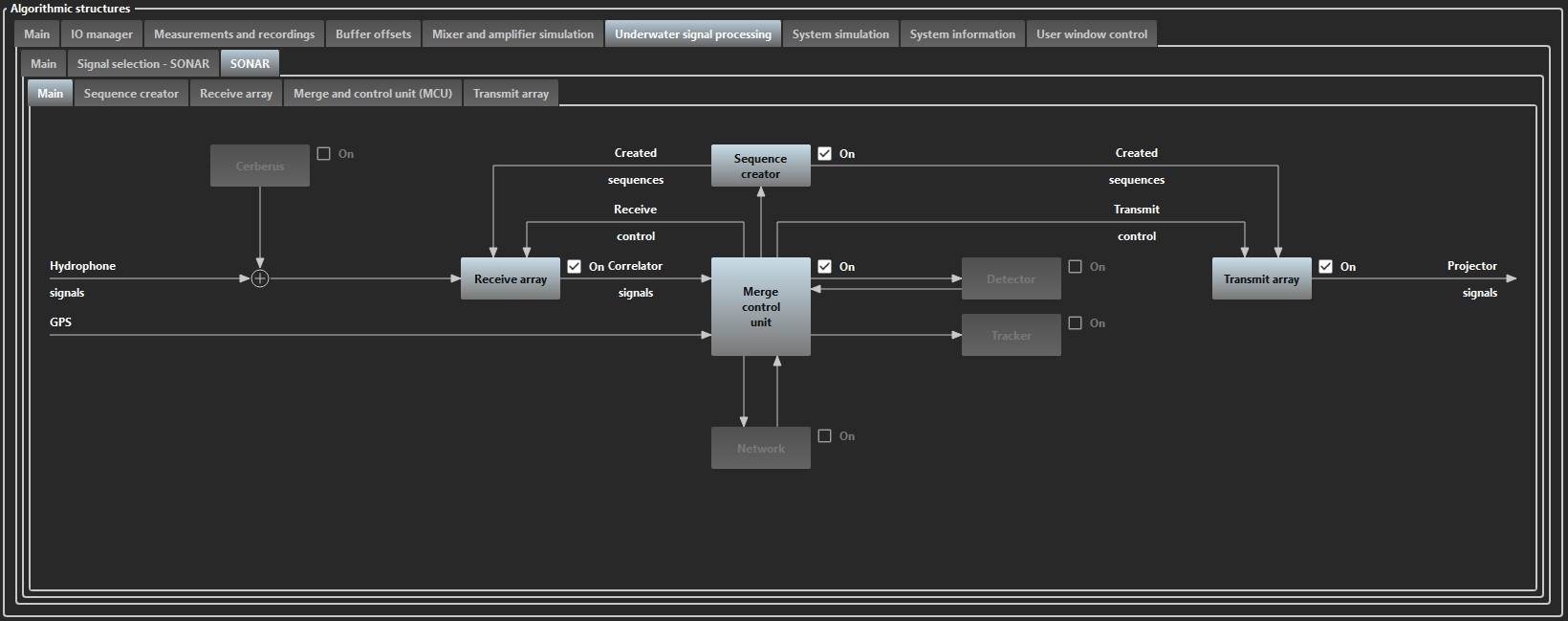

The actual underwater signal processing occurs within the SONAR processing chain shown below. The hydrophone signals may originate from either real hydrophone recordings or simulated environmental signals, as the SONAR processing is decoupled from the simulation process. Consequently, the same processing can be flexibly applied to a simulated environment (running as a second KiRAT instance on another PC) or connected to the hydrophone output during real-world evaluations. The projector transmit sequences are also generated within this chain.
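The decoupling of SONAR processing from the signal source can be expressed as a common interface that both a recording and a simulation satisfy. In the sketch below, `HydrophoneSource`, `RecordedSource`, `SimulatedSource`, and the per-channel energy step are hypothetical names; the network link to a second KiRAT instance is not modeled, and the simulated source simply calls a local function.

```python
from typing import Callable, Iterator, List, Protocol

Block = List[List[float]]  # one block: a list of channels, each a list of samples


class HydrophoneSource(Protocol):
    """Anything that delivers blocks of multichannel hydrophone samples."""
    def blocks(self) -> Iterator[Block]: ...


class RecordedSource:
    """Replays recorded blocks, standing in for real hydrophone hardware."""
    def __init__(self, recording: List[Block]) -> None:
        self._recording = recording

    def blocks(self) -> Iterator[Block]:
        yield from self._recording


class SimulatedSource:
    """Stands in for the link to a simulation instance; here it just calls a local function."""
    def __init__(self, simulate_block: Callable[[int], Block], n_blocks: int) -> None:
        self._simulate_block = simulate_block
        self._n_blocks = n_blocks

    def blocks(self) -> Iterator[Block]:
        for index in range(self._n_blocks):
            yield self._simulate_block(index)


def channel_energy(block: Block) -> List[float]:
    """Placeholder processing step: energy per channel."""
    return [sum(s * s for s in channel) for channel in block]


def run_sonar_processing(source: HydrophoneSource,
                         process_block: Callable[[Block], List[float]]) -> List[List[float]]:
    """The processing only sees blocks; it never needs to know where they come from."""
    return [process_block(block) for block in source.blocks()]


def fake_simulation(index: int) -> Block:
    return [[0.5 * index, 1.0], [0.0, 2.0]]


recording = [fake_simulation(i) for i in range(3)]
print(run_sonar_processing(RecordedSource(recording), channel_energy))
print(run_sonar_processing(SimulatedSource(fake_simulation, 3), channel_energy))
# both print [[1.0, 4.0], [1.25, 4.0], [2.0, 4.0]]
```

Using a structural `Protocol` means any object with a matching `blocks()` method can be plugged in, so swapping a simulation for real hardware does not touch the processing code.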

SONAR processing chain GUI in KiRAT.

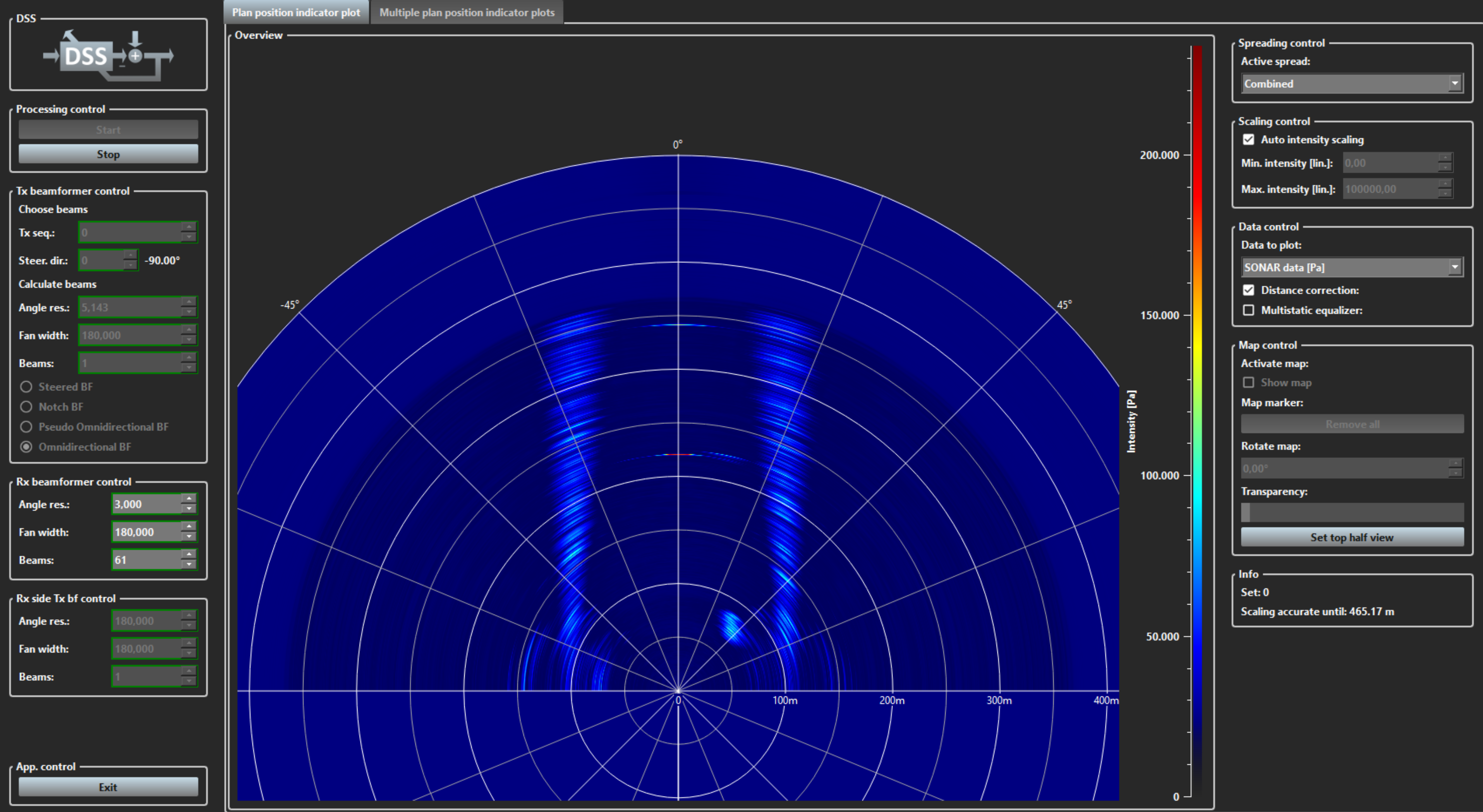

The hydrophone signals are correlated with the transmit sequences to generate the echogram displayed in the SONAR operator user interface shown below. Both the transmit-side and receive-side beamformer settings can be adjusted here, along with other parameters such as spread control, scaling, and the selection of data to be plotted.
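The correlation step is a matched filter (pulse compression): cross-correlating each received ping with the transmitted sequence concentrates the echo energy at the lag matching the round-trip delay, and stacking one correlation magnitude per ping yields an echogram. The sketch below assumes a single hydrophone channel and a linear frequency-modulated (LFM) chirp as the transmit sequence; beamforming, spread control, and scaling are not modeled, and all names are illustrative.

```python
import numpy as np


def lfm_chirp(f0_hz, f1_hz, duration_s, fs):
    """Linear frequency-modulated pulse, used here as an assumed transmit sequence."""
    t = np.arange(int(round(duration_s * fs))) / fs
    sweep_rate = (f1_hz - f0_hz) / duration_s
    return np.sin(2.0 * np.pi * (f0_hz * t + 0.5 * sweep_rate * t ** 2))


def matched_filter(rx, tx):
    """Cross-correlate received samples with the transmit sequence.
    Index k of the result corresponds to an echo starting k samples after transmission."""
    full = np.correlate(rx, tx, mode="full")
    return full[len(tx) - 1:]  # keep non-negative lags only


def echogram_db(pings, tx, floor=1e-12):
    """Stack one matched-filter magnitude per ping into a (ping x range-bin) image in dB."""
    rows = [np.abs(matched_filter(rx, tx)) for rx in pings]
    return 20.0 * np.log10(np.vstack(rows) + floor)


fs = 48_000.0
tx = lfm_chirp(5_000.0, 10_000.0, 0.005, fs)   # 240 samples
rng = np.random.default_rng(0)
pings = []
for echo_start in (700, 710, 720):              # a slowly receding target, one ping each
    rx = 0.05 * rng.standard_normal(2_000)
    rx[echo_start:echo_start + len(tx)] += 0.5 * tx
    pings.append(rx)
img = echogram_db(pings, tx)
print(img.shape, img.argmax(axis=1))           # (3, 2000) [700 710 720]
```

A common refinement, not shown here, is to take the envelope of the analytic signal instead of the raw correlation magnitude, which removes the carrier ripple from the echogram.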

User interface of the SONAR operator.

Thanks to its adaptability and accurate real-time feedback on the environmental state, the digital ocean also enables autonomous agent-environment interaction for AI training, for example for training a reinforcement learning agent. Because the simulated environment responds dynamically to changes in the SONAR scan parameters, an AI agent can explore it independently and infer its dynamics without the need for manual labeling. As a result, the agent can learn to adapt to its observed environment by adjusting system parameters to achieve a desired objective. For example, when detecting and classifying rising methane gas bubbles in the water column, such an objective could be maximizing the signal-to-noise ratio (SNR) of the bubble targets in the received scans.
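To make the SNR objective concrete, the sketch below uses the SNR in dB, 10 log10(P_signal / P_noise), as a reward and lets an epsilon-greedy multi-armed bandit, the simplest single-state form of reinforcement learning, choose a transmit center frequency. This bandit is swapped in for illustration only. The scan function is a hypothetical stand-in with a made-up response peaking at 30 kHz, not a bubble acoustics model, and a full reinforcement learning agent would additionally condition its actions on observed state.

```python
import math
import random
from typing import Dict, List, Tuple


def snr_db(signal_power: float, noise_power: float) -> float:
    """Reward: signal-to-noise ratio of the bubble echo, in dB."""
    return 10.0 * math.log10(signal_power / noise_power)


def toy_scan(center_freq_khz: float, rng: random.Random) -> Tuple[float, float]:
    """Hypothetical stand-in for one simulated scan: returns (signal power, noise power).
    The made-up response peaks at 30 kHz; it is not a physical bubble model."""
    signal = 1.0 / (1.0 + ((center_freq_khz - 30.0) / 10.0) ** 2)
    noise = 0.01 * (1.0 + 0.1 * rng.random())
    return signal, noise


def epsilon_greedy(actions: List[float], episodes: int, epsilon: float,
                   rng: random.Random) -> Tuple[float, Dict[float, float]]:
    """Multi-armed bandit: estimate the mean reward (SNR) of each parameter setting."""
    value = {a: 0.0 for a in actions}
    count = {a: 0 for a in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)                      # explore
        else:
            action = max(actions, key=lambda a: value[a])     # exploit current best estimate
        reward = snr_db(*toy_scan(action, rng))
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # incremental mean
    return max(actions, key=lambda a: value[a]), value


best, estimates = epsilon_greedy([10.0, 20.0, 30.0, 40.0, 50.0], episodes=500,
                                 epsilon=0.2, rng=random.Random(42))
print(best)  # 30.0
```

The epsilon parameter trades exploration against exploitation: without occasional random choices, the agent would keep using whichever setting it happened to try first.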